User logs of Hadoop jobs serve multiple purposes. First and foremost, they can be used to debug issues while running a MapReduce application – correctness problems with the application itself, race conditions when running on a cluster, and debugging task/job failures due to hardware or platform bugs. Secondly, one can do historical analyses of the logs to see how individual tasks in job/workflow perform over time. One can even analyze the Hadoop MapReduce user-logs using Hadoop MapReduce(!) to determine any performance issues.

Handling of user-logs generated by applications has been one of the biggest pain-points for Hadoop installations in the past. In Hadoop 1.x, user-logs are left on individual nodes by the TaskTracker, and the management of the log files on local nodes is both insufficient for longer term analyses as well as non-deterministic for user access. YARN tackles this logs’ management issue by having the NodeManagers (NMs) provide the option of moving these logs securely onto a distributed file-system (FS), for e.g. HDFS, after the application completes.

Motivation

In Hadoop 1.x releases, MapReduce is the only programming model available for users. Each MapReduce job runs a bunch of mappers and reducers. Every map/reduce task generates logs directly on the local disk – syslog/stderr/stdout and optionally profile.out/debug.out etc. These task logs are accessible through the web UI, which is the only convenient way to view them – login into individual nodes to inspect logs is both cumbersome and sometimes not possible due to access restrictions.

State of the log-management art in Hadoop 1.x

For handling the users’ task-logs, the TaskTrackers (TTs) makes use of a UserLogManager. It is composed of the following components.

- UserLogCleaner: Cleans logs of a given job after retaining them for a certain time starting from the job-finish. The configuration property mapred.userlog.retain.hours dictates this time, defaulting to one day. It adds every job’s user-log directory to a cleanup thread to delete them after user-log retain-hours. This thread wakes up every so often to delete any old logs that have hit their retention interval.

- On top of that, all logs in ${mapred.local.dir}/userlogs are added to the UserLogCleaner once a TT restarts.

- TaskLogsTruncater: This component truncates every log file beyond a certain length after a task finishes. The configuration properites mapreduce.cluster.map.userlog.retain-size and

mapreduce.cluster.reduce.userlog.retain-size control how much of the userlog is to be retained. The assumption here is that when something goes wrong with a task, the tail of the log should generally indicate what the problem is and so we can afford to lose the head of the log when the log grows beyond some size. - mapred.userlog.limit.kb is another solution that predates the above: While a task JVM is running, its stdout and stderr streams are piped to the unix tail program which only retains the specified log size and writes them to stdout/stderr files.

There were a few more efforts that didn’t make to production

- Log collection: a user invokable LogCollector that runs on client/gateway machines and collects per-job logs into a compact format.

- Killing of running tasks exceeding N GB of logs because otherwise a run-away task can fill up the disk consisting logs, causing downtime.

Problems with existing log management:

- Truncation: Users complain about truncated logs more often than that. Few users need to access the complete logs. No limit really satisfies all the users – 100KB works for some, but not so much for others.

- Run-away tasks: TaskTrackers/DataNodes can still go out of disk space due to excessive logging as truncation only happens after tasks finish.

- Insufficient retention: Users complain about the log-retention time. No admin configured limit satisfies all the users – the default of one day works for many users but many gripe about the fact that they cannot do post analysis.

- Accesss: Serving logs over HTTP by the TTs is completely dependent on the job finish time and the retention time – not perfectly reliable.

- Collection status: The same non-reliability with a log-collector tool – one needs to build more solutions to detect when the log-collection starts and finishes and when to switch over to such collected logs from the usual files managed by TTs.

- mapred.userlog.limit.kb increases memory usage of each task (specifically with lots of logging), doesn’t work with more generic application containers supported by YARN.

- Historical analysis: All of these logs are served over HTTP only as long as they exist on the local node – if users want to do post analysis, they will have to employ some log-collection and build more tooling around that.

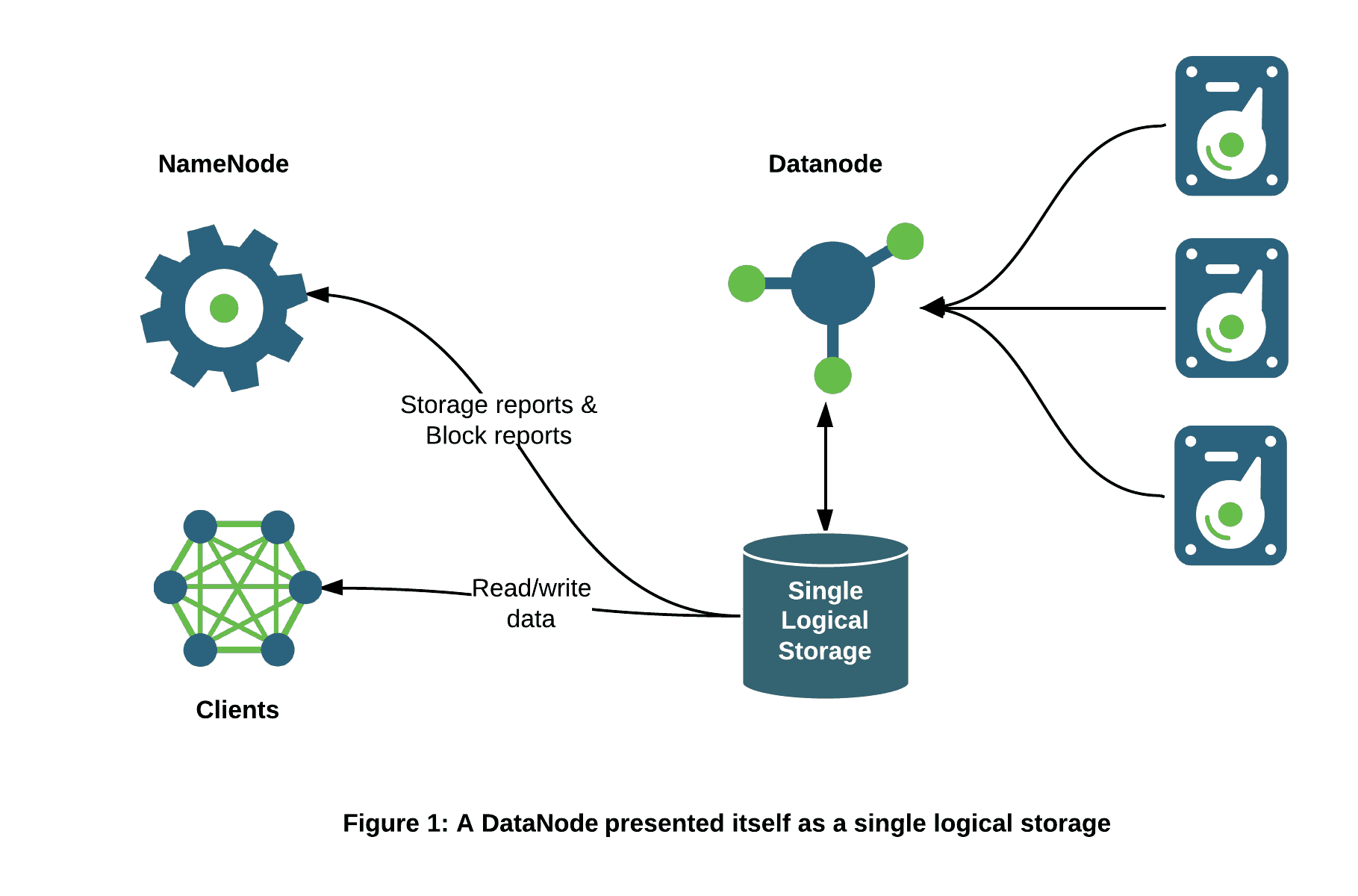

- Load-balancing & SPOF: All the logs are written to a single log-directory – no load balancing across disks & if that one disk is down, all jobs lose all logs.

Admitting that existing solutions were only really stop-gap to a more fundamental problem, we took the opportunity to do the right thing by enabling in-platform log aggregation.

Log-aggregation in YARN: Details

So, instead of truncating user-logs, and leaving them on individual nodes for certain time as done by the TaskTracker, the NodeManager addresses the logs’ management issue by providing the option to move these logs securely onto a file-system (FS), for e.g. HDFS, after the application completes.

- Logs for all the containers belonging to a single Application and that ran on a given NM are aggregated and written out to a single (possibly compressed) log file at a configured location in the FS.

- In the current implementation, once an application finishes, one will have

- an application level log-dir and

- a per-node log-file that consists of logs for all the containers of the application that ran on this node.

- Users have access to these logs via YARN command line tools, the web-UI or directly from the FS – no longer just restricted to a web only interface.

- These logs potentially can be stored for much longer times than was possible in 1.x, given they are stored a distributed file-system.

- We don’t need to truncate logs to very small lengths – as long as the log sizes are reasonable, we can afford to store the entire logs.

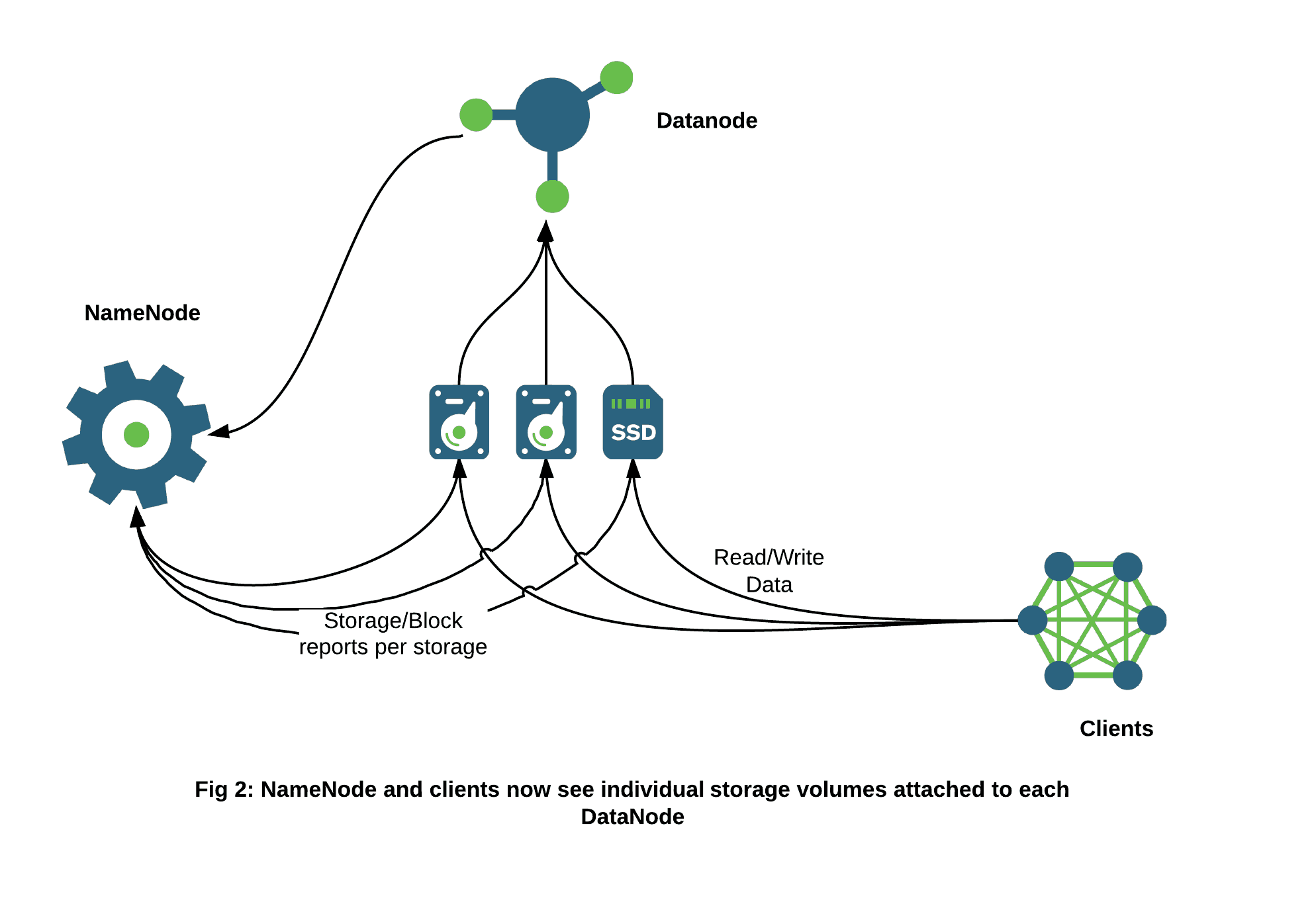

- In addition to that, while the containers are running, the logs are now written to multiple directories on each node for effective load balancing and improved fault tolerance.

- AggregatedLogDeletionService is a service that periodically deletes aggregated logs. Today it is run inside the MapReduce JobHistoryServer only.

Usage

Web UI

On the web interfaces, the fact that logs are aggregated is completely hidden from the user.

- While a MapReduce application is running, users can see the logs from the ApplicationMaster UI which redirects to the NodeManager UI

- Once an application finishes, the completed information is owned by the MapReduce JobHistoryServer which again serves user-logs transparently.

- For non-MapReduce applications, we are working on a generic ApplicationHistoryServer that does the same thing.

Command line access

In addition to the web-UI, we also have a command line utility to interact with logs.

$ $HADOOP_YARN_HOME/bin/yarn logs

Retrieve logs for completed YARN applications.

usage: yarn logs -applicationId <application ID> [OPTIONS]

general options are:

-appOwner <Application Owner> AppOwner (assumed to be current user if

not specified)

-containerId <Container ID> ContainerId (must be specified if node

address is specified)

-nodeAddress <Node Address> NodeAddress in the format nodename:port

(must be specified if container id is

specified)

So, to print all the logs for a given application, one can simply say

yarn logs -applicationId <application ID>

On the other hand, if one wants to get the logs of only one container, the following works

yarn logs -applicationId <application ID> -containerId <Container ID> -nodeAddress <Node Address>

The obvious advantage with the command line utility is that now you can use the regular shell utils like grep, sort etc to filter out any specific information that one is looking for in the logs!

Administration

General log related configuration properties

- yarn.nodemanager.log-dirs: Determines where the container-logs are stored on the node when the containers are running. Default is ${yarn.log.dir}/userlogs.

- An application’s localized log directory will be found in ${yarn.nodemanager.log-dirs}/application_${appid}.

- Individual containers’ log directories will be below this, in directories named container_{$containerId}.

- For MapReduce applications, each container directory will contain the files stderr, stdin, and syslog generated by that container.

- Other frameworks can choose to write more or less files, YARN doesn’t dictate the file-names and number of files.

- yarn.log-aggregation-enable: Whether to enable log aggregation or not. If disabled, NMs will keep the logs locally (like in 1.x) and not aggregate them.

Properties respected when log-aggregation is enabled

- yarn.nodemanager.remote-app-log-dir: This is on the default file-system, usually HDFS and indictes where the NMs should aggregate logs to. This should not be local file-system, otherwise serving daemons like history-server will not able to serve the aggregated logs. Default is /tmp/logs.

- yarn.nodemanager.remote-app-log-dir-suffix: The remote log dir will be created at {yarn.nodemanager.remote-app-log-dir}/${user}/{thisParam}. Default value is “logs”".

- yarn.log-aggregation.retain-seconds: How long to wait before deleting aggregated-logs, -1 or a negative number disables the deletion of aggregated-logs. One needs to be careful and not set this to a too small a value so as to not burden the distributed file-system.

- yarn.log-aggregation.retain-check-interval-seconds: Determines how long to wait between aggregated-log retention-checks. If it is set to 0 or a negative value, then the value is computed as one-tenth of the aggregated-log retention-time. As with the previous configuration property, one needs to be careful and not set this to low values. Defaults to -1.

- yarn.log.server.url: Once an application is done, NMs redirect web UI users to this URL where aggregated-logs are served. Today it points to the MapReduce specific JobHistory.

Properties respected when log-aggregation is disabled

- yarn.nodemanager.log.retain-seconds: Time in seconds to retain user logs on the individual nodes if log aggregation is disabled. Default is 10800.

- yarn.nodemanager.log.deletion-threads-count: Determines the number of threads used by the NodeManagers to clean-up logs once the log-retention time is hit for local log files when aggregation is disabled.

Other setup instructions

- The remote root log directory is expected to have the permissions 1777 with ${NMUser} and directory and group-owned by ${NMGroup} – group to which NMUser belongs.

- Each application level dir will be created with permission 770, but user-owned by the application-submitter and group owned by ${NMGroup}. This is so that the application-submitter can access aggregated-logs for his own sake and ${NMUser} can access or modify the files for log management.

- ${NMGroup} should be a limited access group so that there are no access leaks.

Conclusion

In this post, I’ve described the motivations for implementing log-aggregation and how it looks for the end user as well as the administrators. Log-aggregation proved to be a very useful feature so far. There are interesting design decisions that we made and some unsolved challenges with the existing implementation – topics for a future post.

The post Simplifying user-logs management and access in YARN appeared first on Hortonworks.

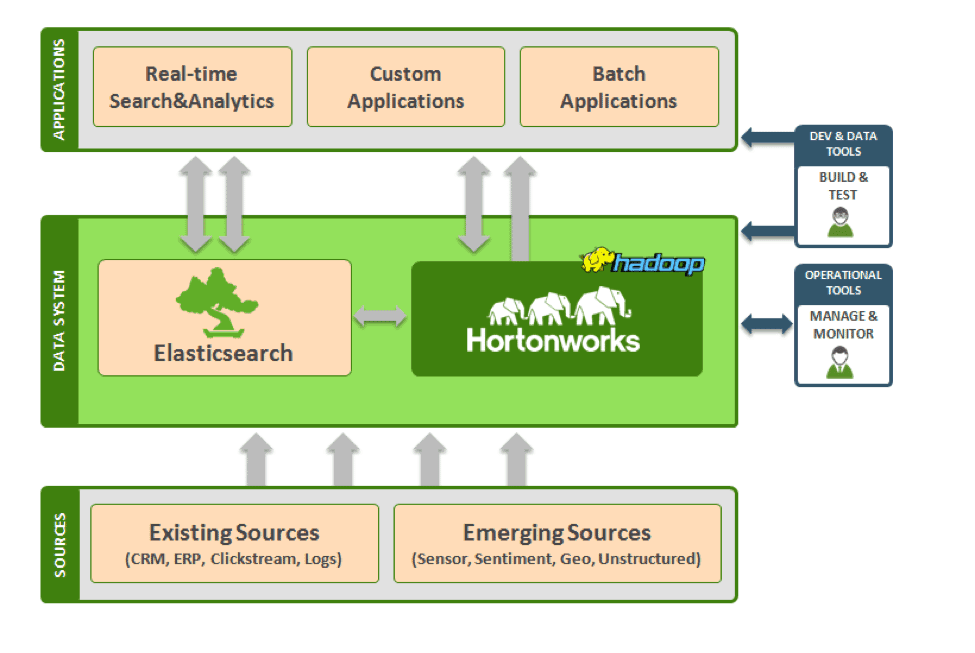

We believe the fastest path to innovation is the open community and we work hard to help deliver this innovation from the community to the enterprise. However, this is a two way street. We are also hearing very distinct requirements being voiced by the broad enterprise as they integrate Hadoop into their data architecture.

We believe the fastest path to innovation is the open community and we work hard to help deliver this innovation from the community to the enterprise. However, this is a two way street. We are also hearing very distinct requirements being voiced by the broad enterprise as they integrate Hadoop into their data architecture.

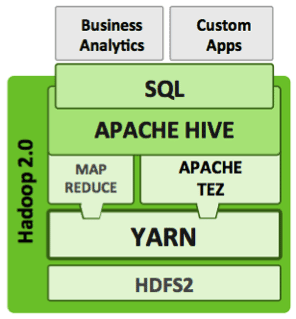

Tez is a low-level runtime engine not aimed directly at data analysts or data scientists. Frameworks need to be built on top of Tez to expose it to a broad audience… enter SQL and interactive query in Hadoop.

Tez is a low-level runtime engine not aimed directly at data analysts or data scientists. Frameworks need to be built on top of Tez to expose it to a broad audience… enter SQL and interactive query in Hadoop.